Understanding Database Partitioning in CouchDB

Posted Wednesday, March 12, 2025 by The Neighbourhoodie Team. Tags: Tip, CouchDB

CouchDB partitions optimise performance for both storage and queries. Find out how they work, and how to make the most of them.

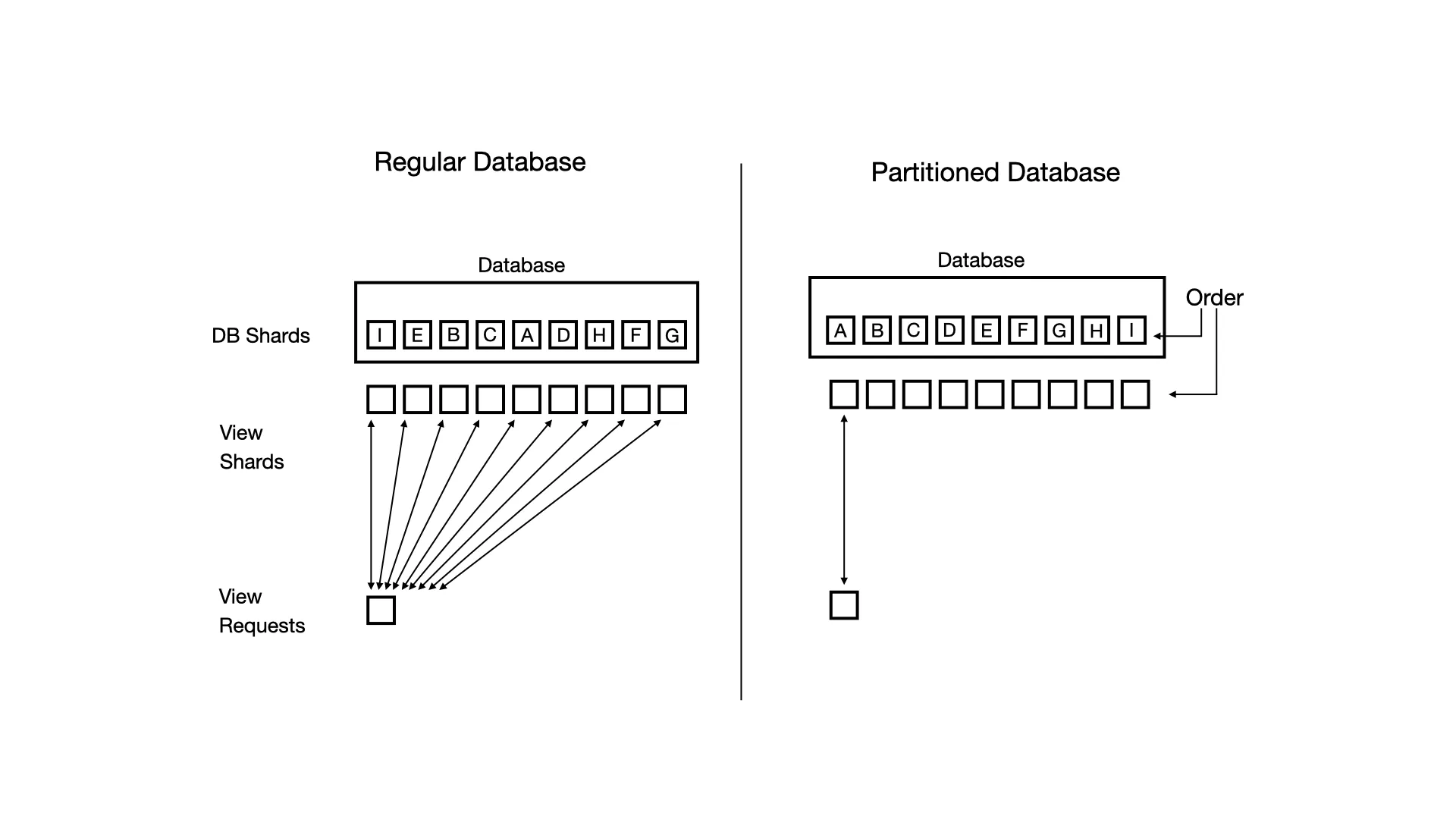

CouchDB version 3.0.0 introduced partitioned databases. Partitioned databases are a performance optimisation for a specific way of using CouchDB. To understand how they work, let’s briefly revisit how sharding works in CouchDB.

Each database is split into q shards. The larger the database, the larger the q value, i.e. the more shards you have. Each shard is responsible for answering all queries for a specific range of document _ids. As a simplified example, in a q=4 database (4 shards):

- shard 1 takes care of all documents with _ids starting with A–G

- shard 2 covers H–M

- shard 3 covers N–T

- shard 4 covers U–Z

But there is one more level of indirection: when writing a document to a database, CouchDB uses consistent hashing to determine which shard it goes on. So when looking at which range to write a document to, CouchDB does not look at the doc _id itself, but a hash of the doc _id.

This ensures that all shards are used equally. Say you have a lot of document _ids that start with the letter A. Without consistent hashing, your first shard would hold most of the documents and would have to handle most of the requests to that database. To make the best use of all available computing resources, every shard should carry roughly the same load, hence the indirection via a consistent hash.
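To make the indirection concrete, here is a minimal sketch of hash-based shard selection. It assumes a CRC32 hash over the document _id and a 32-bit hash space cut into q equal ranges. That is a simplified model for illustration, not CouchDB's actual code, and the function name `shard_for` is made up for this example.

```python
import zlib

HASH_SPACE = 2 ** 32  # CRC32 yields a 32-bit value


def shard_for(doc_id: str, q: int) -> int:
    """Return the 0-based index of the shard whose hash range contains doc_id.

    Simplified model (assumption): the hash space is split into q equal
    ranges and the document lands in the range that contains its hash.
    """
    hashed = zlib.crc32(doc_id.encode("utf-8"))
    return hashed * q // HASH_SPACE


# 1000 document _ids that all start with the letter A
ids = [f"A{n:04d}" for n in range(1000)]

counts = [0] * 4
for doc_id in ids:
    counts[shard_for(doc_id, 4)] += 1

# Documents per shard: spread over all four shards despite the shared prefix
print(counts)
```

Even though every _id starts with A, the documents end up on all four shards, because the shard is chosen from the hash rather than from the _id itself.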

Now for database partitions. Say you want to get the documents that belong to a user, maybe to list a messaging inbox. You already made it easy on yourself and prefixed the _ids of all documents for a user with the user id, so all you need to do is query _all_docs with a startkey/endkey combination that covers the user id. Done.
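Such a prefix query could be built roughly as follows. This is a sketch under assumptions: the server URL, the database name `messages`, the user id `user123` and the helper name `inbox_query_url` are all hypothetical. The `\ufff0` suffix on the endkey is a common convention for "everything that starts with this prefix", since it sorts after ordinary characters.

```python
import json
from urllib.parse import quote, urlencode


def inbox_query_url(base_url: str, db: str, user_id: str, limit: int = 25) -> str:
    """Build an _all_docs URL covering every _id that starts with user_id."""
    params = {
        # startkey and endkey must be JSON-encoded strings
        "startkey": json.dumps(user_id),
        # high-sorting sentinel closes the prefix range (convention, see above)
        "endkey": json.dumps(user_id + "\ufff0"),
        "limit": limit,
        "include_docs": "true",
    }
    return f"{base_url}/{quote(db, safe='')}/_all_docs?{urlencode(params)}"


# Hypothetical server, database and user id
url = inbox_query_url("http://localhost:5984", "messages", "user123")
print(url)
```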

Under the hood, however, CouchDB now has to talk to all shards to find the documents that make up the result. That’s because even though the _ids of all these docs share a common prefix, consistent hashing makes sure they are distributed equally across all shards.

So far, so good. But now imagine you have a very large database, say q=16 or more. Now you have to talk to 16 shards to fetch the first page of your inbox display, say 25 to 50 documents in total. And you have a great many users doing this all the time. That is a lot of requests to a lot of shards to get only a few documents per request.

Partitioned databases were designed to optimise this precise use case. They let you undo the effects of consistent hashing by making sure that all documents with the same prefix are stored on the same shard. Now, when getting a list of documents, CouchDB only needs to talk to a single shard to get the answer, instead of 16 shards.

Consistent hashing is still used to make sure that each prefix set is distributed equally across all shards for the same reasons outlined above.
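The following sketch illustrates the idea. In a partitioned database, document _ids take the form `partition:dockey`, and the shard is chosen from the partition part only. As before, CRC32 over equal hash ranges is a simplified model, and the function names, database name and user ids are hypothetical.

```python
import zlib
from urllib.parse import quote

HASH_SPACE = 2 ** 32  # CRC32 yields a 32-bit value


def partition_of(doc_id: str) -> str:
    """Return the partition key, i.e. the part of the _id before the first colon."""
    partition, sep, doc_key = doc_id.partition(":")
    if not sep or not partition or not doc_key:
        raise ValueError(f"expected an _id of the form 'partition:dockey': {doc_id!r}")
    return partition


def shard_for_partitioned(doc_id: str, q: int) -> int:
    """Pick the shard from a hash of the partition key only (simplified model)."""
    hashed = zlib.crc32(partition_of(doc_id).encode("utf-8"))
    return hashed * q // HASH_SPACE


def partition_all_docs_url(base_url: str, db: str, partition: str) -> str:
    """Build the URL that lists the documents of a single partition."""
    return (
        f"{base_url}/{quote(db, safe='')}"
        f"/_partition/{quote(partition, safe='')}/_all_docs"
    )


# All documents of one user share a partition key and therefore a shard
inbox = [f"user123:msg-{n:03d}" for n in range(50)]
inbox_shards = {shard_for_partitioned(doc_id, 16) for doc_id in inbox}

# Different users are still spread across the shards
user_shards = {shard_for_partitioned(f"user{n}:msg-001", 4) for n in range(100)}

print(len(inbox_shards), sorted(user_shards))
print(partition_all_docs_url("http://localhost:5984", "messages", "user123"))
```

A database has to be created as partitioned up front, with `PUT /{db}?partitioned=true`. After that, queries scoped to one partition go through the `/{db}/_partition/{partition}/...` endpoints.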

To formalise the above scenario, so you can decide whether your use-case applies here as well, let’s look at the constraints of partitioned databases.

- The number of partitions must be high. Above, we use the user id as the partition key. Having thousands or hundreds of thousands of users is a good example of a high number of partitions. In contrast, say your partition key is a document type. Systems typically have a fixed number of types (users, messages, configuration, events, logs, etc.) that might grow slowly. That gives you a low number of partitions, and the corresponding optimisations do not kick in. You should not use partitioning in that case.

- The number of documents and the total amount of data stored in each partition need to fit comfortably into a single shard. In practice, you want to store hundreds, thousands or more partitions on a single shard.