Understanding Parallel Preads in CouchDB posted Friday, July 11, 2025 by Jan Lehnardt CouchDBinternalsrelease

CouchDB 3.5.0 has been released and it comes with an exciting new feature: Parallel Preads. But what does that mean? To understand them, we have to dive deep into the core of CouchDB and learn how it works before we find out how it is working even better now.

What is a P-Read Anyway?

At the lowest level, CouchDB, like any other database, operates on POSIX

files, that is files in a file system with a standard API to open, read and

close files as needed. A P-Read, or pread is a read from a specified

position in the file. This is a single operation as opposed to using two steps,

a seek to go to a position in the file and then reading from there.

// open file for reading

const fileHandle = await fs.open('filename', 'rw')

// read the first 4096 bytes from disk

// on a newly opened file, `read` starts

// reading at position `0`

const length = 4096

const firstFourK = await fs.read(fileHandle, length)

// read the second 4096 bytes from disk using

// two steps, first `seek` and then `read`

const seekTo = 4096

fs.seek(fileHandle, seekTo)

const secondFourK = await fs.read(fileHandle, length)

// read the third 4096 bytes using a single

// pread operation to specify the start and

// length of bytes we want to read

const startPosition = 8192

const thirdFourK = await fs.pread(fileHandle, startPosition, length)

// close the file handle when we are done

close(fileHandle)That doesn’t look very fancy, why is this feature remarkable then? The Parallel is where it gets interesting. Like most other databases, CouchDB lets multiple clients send requests at the same time. In fact, CouchDB is particularly good at this. It can handle thousands and tens of thousands of clients at the same time reading and writing documents and views and replicating and all the other things CouchDB supports.

If all of these request happen to occur sequentially, there is no problem reading and writing the database file.

However, when reads and writes occur at the same time (or interleave), then writes have to wait for reads to complete, and reads and more writes have to wait for writes to complete. Multiple reads can still happen in parallel.

Strategies for Safe Parallelism

One strategy to make this safe is a strategy called multi-version-concurrency-control (or MVCC). It means that instead of updating a single record with new data, when an update is issued, instead a wholly separate new version of the record is written into a different part of the database file.

One consequence of that is if 100s of clients are reading the current version and then a new version is being written, the write can happen independently from reads and does not have to wait for all of them to finish first. At the same time, the write also doesn’t have to lock the record for updating and thus block any future readers from accessing it. It can all happen at the same time. All clients can make progress and throughput can be very high.

The second strategy to ensure safety in this setup is the single-writer principle. While many clients can read from the database in parallel, only a single process is allowed to write. Another term for this is concurrent reads / serialised writes. CouchDB is written in Erlang and Erlang is built around a lightweight process model and it is used here to implement this principle: each client is handled by its own process that is completely isolated from other processes and all of these processes can run — and on multi-core CPUs actually do run — in parallel.

However, all clients that want to insert new data or update or delete existing

data in a CouchDB database have to share a single update process. It acts as a

queue to batch up writes for efficiency as well as safety that only it controls

when new bytes are written to disk.

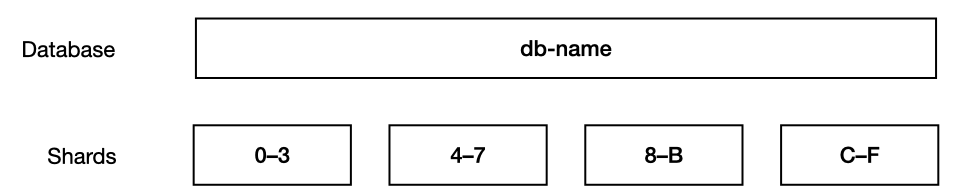

One downside of this model is that all writing happens on a single Erlang

process which in turn means all writes are running at most at the speed of a

single CPU core. CouchDB counteracts this downside by database sharding: using

multiple database files for a single logical database where each one of them

gets its own updater process.

In that setup updates can happen in parallel, just on separate files. Sharding

comes with other trade-offs that exceed the scope of this article, but there are

downsides to, say use 16 (a lot) shards for a 10GB (small) database. CouchDB

recommends using 1–2 shards for this. But that means that a database of that

size will never support more writes than 1–2 update process(es) can handle.

The limit for this is very high, so for most moderate and medium uses of

CouchDB, this is not a factor, but when there are a lot of concurrent requests,

the limit can be felt.

The final piece of the puzzle that we need to understand the new parallel preads feature is how Erlang represents file descriptors (fds). Fds are a handle provided by the operating system kernel when opening a file. You can use fds to read and write and seek inside a file. Inside of Erlang, an fd gets bound to a single Erlang process.

That means that below the layer of concurrent processes for reads and serialised

update processes for writes sits another single process per database shard

file that receives all read and write operations. Creating yet another

serialised queue. Sharding again helps with this, but as outlined before, it is

not a panacea.

Parallel Preads

For the way CouchDB uses files, there is no intrinsic need for tying a file

descriptor to a single managing process. It just happens to be the default

behaviour of Erlang’s built-in file module. In fact, it is the only behaviour

and in most cases it is a good idea for various safety reasons to keep it that

way.

But CouchDB is not most cases and a non-default behaviour of the file module can

be advantageous: Enter couch_cfile: a clone of Erlang’s built-in file module

without the limitation of a single controlling process. With couch_cfile

underlying a CouchDB database’s shard file, it will allow multiple parallel read

requests to get their own (dup()’d) file descriptor for truly

independent reads and writes. The single-writer principle continues to be

employed, but now reads don’t have to wait for writes and vice versa even at the

lowest level in CouchDB.

It should be clear that instead of using a single channel for operations using multiple ones is better, but how exactly is this better? Or rather: in what scenarios should you see an improvement when using CouchDB? First up, if you have low volumes of traffic, in the tens or even hundreds of average sized requests per second on recent hardware with a speedy SSD or NVME store, you probably do not see much of a difference. But with more concurrency, or large batch writes or high-latency storage, things can look very different. Let’s dive into those scenarios.

Large Batch Writes

When writing large batches of data into CouchDB, say during a nightly import,

the fd managed by the file module will spend most of the allocated time and

IO bandwidth servicing those large batch writes. In numbers that’s many requests of

1000+ documents, especially when the documents are larger than 10k each. While

this is going on, any read request will at least have to wait for the time it

takes to write a single batch to disk.

Fsync

As we have shown elsewhere, CouchDB employs the fsync()

system call to flush data to disk safely after each write. Depending on the

storage system, this is an operation that can take a little while. And just like

with the large batch scenario before, any reads issued at the same time will

have to wait for the fsync() to finish before the reads can be serviced,

adding random latency spikes.

High-Latency Storage

This is just a fancy term for “attached block storage”, a concept that makes abundant storage available to a host over a (admittedly very fast) network. All cloud providers offer such a service (EBS, Volumes, Block Storage). They all have the same problem: a network adds latency and even a few single digit milliseconds can make all the difference for any database, but especially CouchDB. For both of the scenarios, large batch writes and fsyncs, the penalty is even worse if the underlying disk system adds even more latency and thus ultimately curbs concurrency and throughput.

Safety

CouchDB’s #1 promise to its users is that it keeps its data safe and the team would not make such a fundamental change to the core of how it handles storing and accessing data without also making sure it remains safe.

For more details on this, see our our three part series on how CouchDB prevents data corruption:

To that end, the CouchDB team implemented a property test suite to

make sure the couch_cfile module behaves exactly like Erlang’s file

module. Property testing allows the CouchDB developers to describe a range of

possible inputs into a module or function and the test suite will generate many

thousands of pieces of random sample data and ensure that both modules behave

exactly the same.

Numbers

Now we understand the underlying reason for this feature and we have an idea how this makes CouchDB more efficient in a safe way. But how much faster is this in practice?

For this, the CouchDB devs have run a number of benchmarks:

-

Sequential Operations: this benchmark is explicitly designed to not highlight any advantages. It just repeats a number of read requests to certain CouchDB endpoints in quick succession. The goal here is to show that even in the worst case scenario for this feature, CouchDB performance is no worse than before:

- random document reads: 15% more throughput

- read

_all_docs: 15% more throughput - read

_all_docswithinclude_docs=true: 25% more throughput - read

_changes: 4% more throughput - single document writes: 8% more throughput

So far so good. Any gains here are likely to be attributed to bypassing the controlling Erlang process.

-

Concurrent document reads: this benchmark shows that having a distinct

fdper reading client yields better performance even before we interleave any writes. For this scenario, we set up a 12 node CouchDB cluster and had 2000 clients concurrently requesting random documents as fast as they could.The result: 30% more throughput.

-

Constant batched writes with concurrent reads: for this, we set up a single node CouchDB instance with a single shard database and had it receive constant batched writes of documents while reading a single document concurrently as fast as possible.

The result: 40% more throughput.

Conclusion

Removing a single process bottleneck at the core of how CouchDB manages data yields impressive performance gains across the board, even for scenarios that this new feature was not designed for.

The CouchDB developers have done a lot to make sure this feature is safe and as such it is enabled by default in CouchDB 3.5.0, so your setup gets faster by just upgrading, with no further tuning.

The one caveat for all this is: this feature is not available on Windows.

« Back to the blog post overview