Resource Locking with CouchDB and Svelte

Posted Wednesday, January 15, 2025 by Alex

Tags: Tip, CouchDB, Data

This is part four of our blog post series on building a real-time, multi-user Kanban board with CouchDB and Svelte. As mentioned in the previous post, UI or resource locking is one of the most promising mechanisms for avoiding conflicts in the first place: if a card is locked the moment Alice starts editing it, then Bob won’t be able to do anything with it, and therefore won’t be able to introduce a conflict. Sounds like we could have just done this first and not bothered with all the conflict handling in the previous posts! But again, it’s not quite as simple as it seems.

The app exists on GitHub, and you can follow along as we add features by checking out different tags. This post covers step 4. Note that we’re adding a backend component with a corresponding repo later in the post.

When to Lock and when to Unlock

Let’s go through some locking scenarios:

- A card should lock for all other users when a user starts dragging or editing it

- uh… that’s actually it

Now let’s go through some unlocking scenarios. A card should unlock when:

- It is dropped after dragging

- Editing is completed by saving

- Editing is cancelled by clicking cancel

- Editing is cancelled by clicking ”edit” on a different card

- The user navigates away within our hypothetical app and the board component unmounts

- The user closes the tab

- The user closes their browser

- The user slams shut their laptop because they’re late for a meeting 🤔

- The user loses connection because the train they’re on has just barrelled into a tunnel at 250kph 🙈

- The user loses connection to the server for any reason at all 😰

We can handle all of the happy path scenarios up to and including ”The user navigates away within our hypothetical app” with purely frontend code, but beyond that, we need the server to keep track of the connections. There’s simply no guarantee that the client will be able to detect the remaining scenarios and fire off a ”I’m about to disappear, unlock all my stuff quick aaaagh”-type of request in time. As much as I enjoy writing CouchDB-based apps with as little server-side code as possible, this is a point where I must concede that there is no sensible alternative. If we’re doing resource locking, we must cover the unexpected disconnection scenario properly, otherwise it’ll just be a horrible mess.

One of the least complex methods of doing this involves a small HTTP server that listens for long poll connections. This is fine since we’re not actually sending data back and forth in the request, it’s purely there to signal an online presence. When a client connection isn’t restarted after it ends, or it disconnects prematurely, the server should remove all locks associated with that client. This should take care of all the unexpected disconnection scenarios. We need somewhere to store the locks, and while something like Redis would generally be a good choice for this kind of job, we already have a CouchDB, so in the spirit of pragmatism, we’re just going to use that.

As for the locking, we will do that from the client, since we just decided we’re using CouchDB for storage and we’re already set up to write and receive data with it.

Let’s deal with locking first.

How to Store a Lock

For this to work, we need to store either:

- Whether a card is being worked on and by whom, or

- Who is working on which card

Option 1 would require us to extend the Card document by a lockedBy property that would hold a user name or id as its value. However, this would add more write opportunities to the very document type we’re trying to avoid having more writes on, so this is a bad idea. We don’t want to build a locking mechanism that can cause more conflicts.

Option 2 would mean having a document per user in the CouchDB, each with a currentlyLocking key that takes another document’s _id. This has the advantage that locking any document is independent of that document, and does not perform any extra writes on it. In addition, each user would only be writing to their own user docs, meaning we would never have to worry about conflicts when writing to them. This option sounds a lot more sensible.

However, if two users can cause a conflict trying to edit the same document, the same is, annoyingly, also true when they’re trying to lock it. Since we’d be storing the locks in documents-per-user, we can’t actually cause a real conflict, but we could have two users locking the same document. The time window for this occurring is comparatively small, but it’s still an issue. Also, trying to prevent this then requires us to read all user documents, which is also bad. And, on top of that, supporting multiple locks at once (user drags a card while editing another) is pretty complicated. So this option has become quite unattractive as well.

But lo! We have a more couchy third option, and yes, it’s another tiny document with a deterministic _id that references a card! Each card gets its own lock document:

type Lock = {

type: 'lock'

_id: string // `lock-${card._id}`

locks: string // `_id` of the target document

lockedBy: string // username

lockedAt: string // ISO datetime string

}

These lock documents will arrive with the _changes feed like all other documents, and we group them into their own array like we did the other data types. When we render a card, we check whether it has a lock, and prevent interactions with CSS using pointer-events: none;. We display the name of the locking user by referencing a data attribute in a pseudo-element’s content (relevant lines only):

{#each cardsInColumn as card (card._id)}

{@const lock = locks.find((lock) => lock.locks === card._id)}

<div

class="card"

class:locked={lock}

data-lockedby={lock?.lockedBy}

>…</div>

{/each}

.card.locked {

pointer-events: none;

}

.card.locked:before {

content: attr(data-lockedby);

}

If someone else somehow manages to request a lock for the same document, that will fail with a 409, because the lock document already exists, and we can then prevent the editing attempt. In this case, causing the conflict is actually helpful and makes implementing our locks easier.

That all sounds very good. So here’s how the locking will work: Whenever our user starts to edit or drag a card, we call our new lock() function and await whether it returns true or false. If it returns false, we couldn’t get a lock, presumably because one already exists, and we can cancel the edit attempt.

async function lock(docId: string) {

const lock: PouchDB.Core.PutDocument<Lock> = {

_id: `lock-${docId}`,

type: "lock",

locks: docId,

lockedAt: new Date().toJSON(),

lockedBy: currentUserName,

}

let lockAchieved = false

try {

const lockResponse = await db.put(lock)

if (lockResponse.ok) {

lockAchieved = true

}

} catch (error) {

if ((error as PouchDB.Core.Error).status === 409) {

// Maybe pop up message about who locked it

}

}

return lockAchieved

}

That’s locking sorted then.

To unlock, we just delete the lock document. Since you can’t lock a locked card until after it has already been unlocked again on your device, there is no potential for additional conflicts from the deletion. Since deletions also propagate through the _changes feed, all clients can then unlock the card UI.
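How a client might fold incoming lock changes into its local array can be sketched roughly like this. This is a minimal sketch, not the app's actual code: `LockChange` and `applyLockChange` are hypothetical names, and the change shape is a simplified stand-in for what a _changes feed with included docs delivers.

```typescript
// Same shape as the Lock type above, repeated so the sketch is self-contained.
type Lock = {
  type: 'lock'
  _id: string // `lock-${card._id}`
  locks: string // `_id` of the target document
  lockedBy: string // username
  lockedAt: string // ISO datetime string
}

// Hypothetical, simplified view of one entry from the _changes feed.
type LockChange = {
  id: string
  deleted?: boolean
  doc?: Lock
}

// Returns a new array instead of mutating, so reactive UI code sees the update.
function applyLockChange(locks: Lock[], change: LockChange): Lock[] {
  // Drop any previous version of this lock document
  const others = locks.filter((lock) => lock._id !== change.id)
  // A deletion (an unlock) simply removes the entry
  if (change.deleted || !change.doc) return others
  // Anything else is a new or updated lock
  return [...others, change.doc]
}
```

Because the lock's _id is derived from the card's _id, a new lock and its later deletion always refer to the same entry in the array, which is what lets every client unlock the card UI once the deletion arrives.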

Unlocking: The Happy Path

As we already established, there are a couple of scenarios where we must unlock a card from the client side:

- It is dropped after dragging

- Editing is completed by saving

- Editing is cancelled by clicking cancel

- Editing is cancelled by clicking ”edit” on a different card

We can easily identify all of these points in the code and call our new unlock() function from there:

async function unlock(docId: string) {

if (!docId) return

const _id = `lock-${docId}`

const lockDocument = locks.find((lock) => lock._id === _id)

if (!lockDocument) return

try {

await db.put({ ...lockDocument, _deleted: true })

} catch (error) {

console.log("unlock error", error)

}

}

We could also handle ”The user navigates away within our hypothetical app and the board component unmounts” from the client side, but honestly, why bother. This is functionally equivalent to disconnecting from our long polling endpoint, so we might as well handle that scenario there and avoid a little extra work.
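Of the happy path scenarios, clicking ”edit” on a different card is the one that combines an unlock and a lock in a single step. Here is one way that hand-over could look. This is a sketch under assumptions: `switchEditing` is a hypothetical helper, and `lock` and `unlock` are passed in with signatures matching the functions above so the example stays self-contained.

```typescript
// Hypothetical signatures matching the lock() and unlock() functions above.
type LockFn = (docId: string) => Promise<boolean>
type UnlockFn = (docId: string) => Promise<void>

// Returns the id of the card being edited after the call:
// the new card if we got its lock, otherwise null.
async function switchEditing(
  currentId: string | null,
  nextId: string,
  lock: LockFn,
  unlock: UnlockFn
): Promise<string | null> {
  // Clicking "edit" on the card we are already editing changes nothing
  if (currentId === nextId) return currentId
  // Release the card we were editing before asking for the next one
  if (currentId) await unlock(currentId)
  // Only enter edit mode if the lock was actually acquired
  const gotLock = await lock(nextId)
  return gotLock ? nextId : null
}
```

Releasing the old lock first means a failed lock attempt leaves the user editing nothing. Whether that is preferable to keeping the old card open is a design choice this sketch simply assumes.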

Unlocking: The Unhappy Path

Right, after three and a half blog posts, it’s finally time to write a bit of server code. We need a small server that keeps track of long poll connections per user, and cleans up their locks if they disappear.

Here’s what we’re going to do, in hopefully about 100 lines of Node.js:

- write a small server with hapi

- it will have a single endpoint called /onlineuser, and in lieu of a proper auth system in this demo, that endpoint takes a query param with the user name [1]

- for each user that hits that endpoint, a 30 second timeout (longpoll) will start in order to keep the connection alive

  - if the client disconnects during that time, we find and delete all their locks in the CouchDB, and cancel their longpoll

  - if the longpoll timeout runs its course, we start and store a second timeout (wait) to wait for the user to reconnect (restart the long poll request)

    - if this wait timeout runs its course, we find and delete all of the user’s locks in the CouchDB

- whenever a user hits the endpoint, we check whether we already had a wait timeout for them, and if so, cancel it and remove our reference to it
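The timeout bookkeeping in that list can be sketched independently of hapi. This is a rough sketch rather than the code from the backend repo: `PresenceTracker`, `Scheduler` and `endRequest` are hypothetical names, the 5 second wait period is an assumed value (the post only specifies the 30 second longpoll), and wiring `connect` and `disconnect` to the actual request and disconnect events is left out.

```typescript
// Timers are injectable so the logic can be exercised without real waiting.
type TimerHandle = unknown
type Scheduler = {
  set: (fn: () => void, ms: number) => TimerHandle
  clear: (handle: TimerHandle) => void
}

const realTimers: Scheduler = {
  set: (fn, ms) => setTimeout(fn, ms),
  clear: (handle) => clearTimeout(handle as ReturnType<typeof setTimeout>),
}

class PresenceTracker {
  private longpolls = new Map<string, TimerHandle>()
  private waits = new Map<string, TimerHandle>()
  private deleteLocks: (username: string) => unknown
  private timers: Scheduler
  private longpollMs: number
  private waitMs: number

  constructor(
    deleteLocks: (username: string) => unknown,
    timers: Scheduler = realTimers,
    longpollMs = 30000, // 30 seconds, as described above
    waitMs = 5000 // assumed grace period, not taken from the post
  ) {
    this.deleteLocks = deleteLocks
    this.timers = timers
    this.longpollMs = longpollMs
    this.waitMs = waitMs
  }

  // Call when a request to /onlineuser arrives. `endRequest` sends the response.
  connect(username: string, endRequest: () => void): void {
    // The user is back: stop waiting for them and restart the longpoll
    this.cancel(this.waits, username)
    this.cancel(this.longpolls, username)
    const handle = this.timers.set(() => {
      this.longpolls.delete(username)
      endRequest()
      this.startWait(username)
    }, this.longpollMs)
    this.longpolls.set(username, handle)
  }

  // Call when the connection closes. Ignored if the longpoll already ended normally.
  disconnect(username: string): void {
    if (!this.longpolls.has(username)) return
    this.cancel(this.longpolls, username)
    this.deleteLocks(username)
  }

  // Give the client a moment to restart its long poll before cleaning up
  private startWait(username: string): void {
    const handle = this.timers.set(() => {
      this.waits.delete(username)
      this.deleteLocks(username)
    }, this.waitMs)
    this.waits.set(username, handle)
  }

  private cancel(map: Map<string, TimerHandle>, username: string): void {
    if (!map.has(username)) return
    this.timers.clear(map.get(username))
    map.delete(username)
  }
}
```

Like the description above, this keys everything by user name, so it treats one user with several open tabs as a single connection.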

The code isn’t particularly exciting, so we’re not going to look at most of it. The most interesting bit is that we’re now going to have to query CouchDB to find the lock documents belonging to the disconnected user. To do this, we’re going to use an index and a Mango query with PouchDB find (just showing select lines without error handling etc.):

// After instantiating our DB, we build an index to speed up our queries:

db.createIndex({

index: { fields: ['lockedBy'] }

});

// In our delete function, we find the user’s lock documents

async function deleteLocks(username) {

const locks = await db.find({

selector: {

lockedBy: username

}

});

// Then set them to deleted

const docsToDelete = locks.docs.map(lock => {

return { ...lock, _deleted: true }

})

// And then write them back to the CouchDB

await db.bulkDocs(docsToDelete)

}

Since the lock documents are the only ones that have a lockedBy field, this is good enough. This will give us all lock documents belonging to that user, and once we have those, we can delete them by adding _deleted: true to them.

The rest of the backend code lives in this repo. Our frontend repo at the tag step-4 and beyond expects this backend to be running.

Once we have this in place, all we need to do is subscribe to the long polling endpoint from the client whenever the Kanban board mounts, and unsubscribe whenever it unmounts. To declutter our <Board> component, we’ll put this in the existing <UserInfo> component (which was where our users could set their username), and also have it deal with being extremely online.

The <UserInfo> Component

We’re adding the ability to long poll our backend’s only route, /onlineuser. For this, it needs the current username, which this component conveniently already has. All this really needs to do is request the route, and when the request ends, call it again. For this, we’ll make a subscribe function that gets called once in onMount, and then calls itself again once the request returns.

If the request fails, we wait for a while and then try again.
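A minimal sketch of such a subscribe function might look like the following. It is not the component's actual code: it uses a loop rather than a function that calls itself, an AbortSignal to stop when the component unmounts, and an assumed retry delay of 5 seconds. The `request` and `sleep` parameters are hypothetical seams that default to fetch and setTimeout. The URL, including whatever query parameter carries the user name, is passed in as-is.

```typescript
type RequestFn = (url: string, signal: AbortSignal) => Promise<unknown>
type SleepFn = (ms: number) => Promise<void>

async function subscribe(
  url: string,
  signal: AbortSignal,
  request: RequestFn = (u, s) => fetch(u, { signal: s }),
  sleep: SleepFn = (ms) => new Promise<void>((resolve) => setTimeout(resolve, ms)),
  retryDelayMs = 5000 // assumed value
): Promise<void> {
  // Keep one request open at a time until the caller aborts
  while (!signal.aborted) {
    try {
      // Resolves when the server ends the long poll; then we go again
      await request(url, signal)
    } catch {
      // Network trouble: wait a while before retrying, unless we were aborted
      if (!signal.aborted) await sleep(retryDelayMs)
    }
  }
}
```

In the component, this could be started in onMount with the signal of a new AbortController, and stopped by calling abort() on that controller when the component is destroyed. Aborting also cancels the request that is currently in flight, which the server then sees as a disconnect.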

Performance Impact of Locking

You will now have, at worst, twice as many lock documents as you have users that are currently doing something (each user can lock two cards at once, editing one while moving another). The documents are tiny, so their disk and memory impacts are negligible. We do now use a secondary index and a query in order to find each user’s locks when we want to delete them.

You will be firing off more requests and writing to the database more often: at least three writes for every change instead of one. On top of that, simply clicking ”edit” without actually changing anything will already cause two writes, one for the lock and one for the eventual unlock. However, these won’t cause conflicts themselves, and will prevent conflicts on other documents, so this seems like a worthwhile trade-off.

You also now need to write, deploy and manage some server code, but in a proper, large-scale app, this is probably unavoidable anyway. Since CouchDB is an HTTP server that also handles users, authentication and role-based access management to a degree, it’s tempting to think you can do entirely without server code, but realistically that’s usually not the case. You’ll just need a lot less of it.

We’ve said this about a bunch of things in this blog post series, but: there isn’t one silver bullet for avoiding conflicts. Your app may well have characteristics that make this locking approach utterly implausible. You can’t really add locks to a collaborative text editor, for example. As with most things in programming, the answer to ”should I be doing this?” is ”it depends”. The aim of these posts is not to answer these questions for you, but to put you in the right frame of mind to be able to answer them yourself, for your individual situation.

You’re stacking features on top of each other in the hope of ending up in an ideal situation of ”zero conflicts, ever”. You are likely not going to reach this ideal situation. It’s up to you to decide how close you want to get, and how much effort that’s worth to you [2].

Note that this mechanism will be much less useful in an offline first app. If you’re not connected to the internet, you can’t transmit the lock to the other users, so you have to rely entirely on other means to prevent and resolve conflicts.

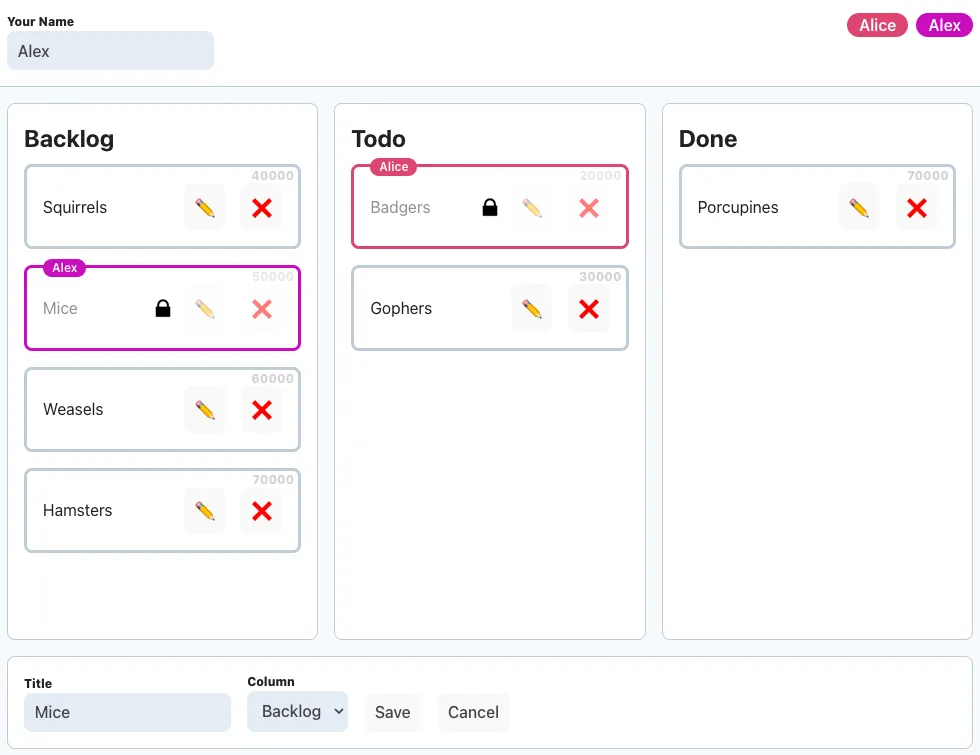

Small Bonus Feature: Avatar Stack

Before we wrap up, let’s use the opportunity to add one more mini-feature: an avatar stack that shows all online users. To make this nicer, we’ll also assign a color to each user when they go online, and highlight their locked cards in that color. That way, everyone can see what everyone else is working on. To do this, we need to store the online users:

export type OnlineUser = {

type: 'onlineUser'

_id: string

name: string

color: string

}

This doc is written whenever the <Board> component mounts. It is deleted by the same server code that deletes the locks.

We receive the onlineUser docs the same way we do all the others, and also sort them into their own array. Now we extend our <UserInfo> component to receive that array as a prop, and show a list of all online users.
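How the color gets picked is not spelled out here. One simple possibility, shown purely as an assumption, is to derive it from the user name so the same user always gets the same color. `colorForUser` and the palette values below are hypothetical.

```typescript
// Hypothetical palette; any list of CSS colors would do.
const PALETTE = ['#e6194b', '#3cb44b', '#4363d8', '#f58231', '#911eb4', '#008080']

// Maps a user name to a palette entry with a small string hash.
function colorForUser(name: string, palette: string[] = PALETTE): string {
  let hash = 0
  for (let i = 0; i < name.length; i++) {
    // Keep the running hash within unsigned 32-bit range
    hash = (hash * 31 + name.charCodeAt(i)) >>> 0
  }
  return palette[hash % palette.length]
}
```

With a small palette, two users can end up with the same color. If that matters, the color could instead be chosen from whatever is not yet used by the existing onlineUser docs.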

The <Card> component gets a tiny update to also receive the online users as a prop. It can now find out the color assigned to the user that locked the current card, and show a border in that color (relevant lines only):

<script>

// Card props

export let locks: Lock[] = []

export let card: Card

export let onlineUsers: OnlineUser[] = []

// Find the lock for this card

$: lock = locks.find((lock) => lock.locks === card._id)

// Find the user for that lock

$: lockingUser = onlineUsers.find((user) => user.name === lock?.lockedBy)

</script>

<div

class="card"

style:border-color={lockingUser?.color}

>…</div>

And there we are:

Conclusion

We’ve arrived at a state I’d be happy to call ”a solid little app”:

- We’ve got a drag-n-drop Kanban board that’s multi-user and shows changes in a real-timeish manner.

- It’s quite resilient in terms of avoiding conflicts, and provides automatic and manual methods of resolution when conflicts do occur.

- It never destroys other users’ data, and helps the current user avoid losing theirs. No last write wins anywhere.

- It provides a locking mechanism that cleans up loose ends and never leaves cards in an uneditable limbo state.

- It nicely visualises who’s online and working on what.

- It relies heavily on CouchDB features and only adds the simplest-possible technologies when absolutely necessary (the small longpoll server).

- The data model is fairly simple and closely aligned with the UI, with no additional levels of abstraction in between. We’re not adding a large overhead of logic or infrastructure to manage conflicts, and we’re not transforming our straightforward data model into a G-Set of LWW-Maps or similar event-based patterns.

In terms of traditional ”always-online” app design, this is pretty great, and a testament to how much you can do with ”just” basic CouchDB and standard web technologies. You could add authentication and role-based access management with barely any additional tech (although you would need to send emails, CouchDB alone can’t do that for you) and very little code, since CouchDB provides those as well.

That being said, we’ve used tools that are fitting for the problem. I would not recommend you build a collaborative rich text editor this way, for example. If you wanted to display the live cursor positions of everyone involved, sockets and a more ephemeral data store would be a good choice. Once again, it depends on what your goals are.

So where can we go next? We can:

- Implement an impartial audit trail, to track who did what and in which order

- Make the app truly offline first, meaning: there is no direct communication with the remote CouchDB, all data is written to a local, client-side PouchDB and then opportunistically synced to the CouchDB, and then on to all other clients. This app can be used when offline, but now the realities on the various clients and the server can diverge much further than in our current implementation: clients might be offline for extended periods of time and rack up dozens or hundreds of changes before they can sync again. Can we make our conflict resolution mechanisms deal with this while still making sense to the users? What happens to locking when you’re offline? Which further data structure and UI improvements could we make to reduce the probability of conflicts? There’s lots more to explore here.

But for now, we must come to an end. See you soon for part 5.

Footnotes

[2] Apocryphal, but relevant: online retailers with limited stock generally don’t want to end up in situations where they sell the last item in stock multiple times. However, the speed of light being what it is, avoiding this situation entirely is physically impossible. The pragmatic approach is reducing the probability of the problem occurring as far as financially sensible, and giving everyone who does end up affected a discount code.